Shanghai Supercomputer Center (SSC) has long provided computing services for national and Shanghai public utilities. Over the years, SSC has run numerous numerical simulations in climate, meteorology, oceanography, and environmental science, meeting the computing needs of accurate weather forecasting, pollutant dispersion modeling, ocean tide forecasting, and flood-season disaster prevention and mitigation. It has also supported a number of large-scale, long-term public utility projects with broad and far-reaching benefits, contributing to the continuous improvement of public utilities. The main users include Shanghai Meteorological Service, Shanghai Water Authority, Shanghai Environmental Monitoring Center, and Ocean University of China.

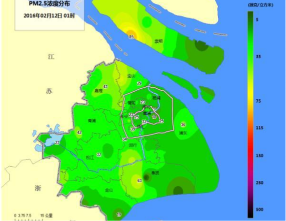

Environmental Monitoring

Shanghai Supercomputer Center has deployed an air quality forecast system on its supercomputers and has provided high performance computing and data storage services to Shanghai Environmental Monitoring Center since 2016. To keep data transmission secure and fast, Shanghai Supercomputer Center has also established a set of physically dedicated network lines (one active and one standby) with a bandwidth of 1 Gbps that connect directly to Shanghai Environmental Monitoring Center. In addition to daily air quality forecasts, the system produces refined forecasts during special periods. For example, during the yearly Shanghai International Import Expo, the air quality forecasts for the Yangtze River Delta region are further refined.
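To give a feel for what a 1 Gbps dedicated line means in practice, the following minimal sketch estimates the ideal transfer time for a dataset. The 50 GB dataset size, the function name `transfer_seconds`, and the `efficiency` parameter are illustrative assumptions, not figures from SSC; real transfers are slower because of protocol overhead.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Estimate transfer time in seconds.

    size_gb    -- data size in decimal gigabytes (1 GB = 10**9 bytes)
    link_gbps  -- nominal link bandwidth in gigabits per second
    efficiency -- fraction of nominal bandwidth actually usable (assumed value)
    """
    if link_gbps <= 0 or not (0 < efficiency <= 1):
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    size_gigabits = size_gb * 8  # 8 bits per byte
    return size_gigabits / (link_gbps * efficiency)


# Hypothetical 50 GB forecast dataset over the 1 Gbps line, ideal conditions.
ideal = transfer_seconds(50, 1)
print(f"Ideal transfer time: {ideal:.0f} s")
```

Lowering `efficiency` (for example to 0.8) gives a more conservative estimate for planning purposes.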

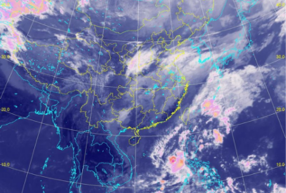

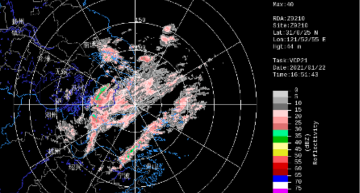

Meteorological Service

Shanghai Supercomputer Center has deployed the Shanghai Numerical Weather Forecast System on its supercomputers and has provided high performance computing and data storage services to Shanghai Meteorological Service since 2019. To keep data transmission secure and fast, Shanghai Supercomputer Center has also established a set of dedicated network lines with a bandwidth of 10 Gbps that connect directly to Shanghai Meteorological Service. This numerical weather forecast system can cover 3 square kilometers as a whole and 1 square kilometer for a local site.

Drawing on years of experience in the construction, management, operation, maintenance, and servicing of computer cluster systems, Shanghai Supercomputer Center has developed a complete cluster computing platform solution. The solution covers infrastructure, hardware architecture, system environment, software resources, application platform, and the operation and maintenance system. It enables a cluster computing platform to be built rapidly, managed in an orderly way, and used efficiently, promotes the deep integration of high performance computing resources with R&D requirements, and quickly turns computing power into innovation capability.

System Operation and Maintenance

System and Job Scheduling Optimization:

File and Storage System Optimization:

Computer cluster system building for an automobile enterprise

Business scenario: large-scale internal computing

SSC provided customized services such as computer cluster building, platform development, application integration, scheduler optimization, and operation and maintenance support to help the enterprise effectively manage and monitor its high performance computing resources, match computing resources to R&D needs, and improve the utilization efficiency of computing resources.

Construction of a high performance computing platform for a branch center of Shanghai Supercomputer Center

Business scenario: large-scale internal and external computing

SSC provided customized services such as computer cluster building, platform collaboration, application integration, scheduler optimization, and operation and maintenance support to help build a high performance computing cloud platform and realize the integrated scheduling of internal and external computing resources.

With extensive experience in high performance computing service platform development and parallel software development, the R&D team of Shanghai Supercomputer Center (SSC) provides services in HPC platform development, software parallelization, software performance evaluation and tuning, porting to heterogeneous platforms, computer cluster evaluation, parallel computing training, etc. For different application scenarios, SSC has built several high performance computing cloud platform products, such as Xfinity, HPCPlus and IMSCloud, to make high performance computing resources more convenient to use.

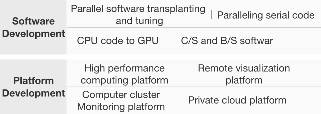

- Parallel software porting and tuning
- Parallelizing serial code
- Porting CPU code to GPU
- C/S and B/S software development

- High performance computing platform
- Remote visualization platform
- Computer cluster monitoring platform
- Private cloud platform

Established in 2006, the HPC Lab of Shanghai Supercomputer Center (SSC) is committed to improving SSC's HPC technology, exploring and practicing new HPC solutions, and providing technical support for R&D projects and user services.

Cases

To support the building of the production artificial intelligence platform of Shanghai Supercomputer Center, HPC Lab tested the feasibility of related technologies and verified the deployment and performance of typical applications on the P100 AI experimental platform.

To explore and test AI-related technologies, HPC Lab built an AI experimental platform with NVIDIA Tesla P100 hardware and training frameworks such as Caffe, TensorFlow, MXNet, Torch, CNTK, and Theano. Users can develop on it with the NVIDIA Deep Learning GPU Training System (DIGITS). On this P100 AI experimental platform, HPC Lab deployed a deep learning framework and further explored related technologies. It also explored and tested Kubernetes container management and monitoring, dynamic management of log data, and the integration of containers with the SLURM scheduling system.

To support the selection of the next supercomputer of Shanghai Supercomputer Center, HPC Lab collaborated with Intel and AMD to deploy Intel Skylake Gold 16-core processor-based servers and AMD EPYC 32-core processor-based servers, and selected typical HPC applications to test and compare the performance of the processors.

To provide a remote visualization service, HPC Lab used the virtualization software VMware Horizon View combined with NVIDIA Tesla M60 and GRID 2.0 to build a virtual visualization testing platform. On this platform, HPC Lab tested the smoothness of operation, image quality, network bandwidth usage, GPU utilization, etc. for the pre- and post-processing of engineering computing software under different schemes, and evaluated the overall usability of the visualization platform.

HPC Lab conducted data analysis benchmark tests on R-based data analysis platforms. The tests cover the performance of open source R packages calling different mathematical libraries, as well as performance on a CPU + GPGPU coprocessor platform and a CPU + Intel Xeon Phi coprocessor platform, providing a reference for R users who want to improve performance.

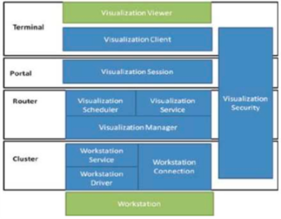

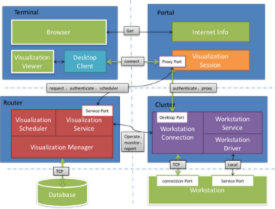

Users: want to complete visualized pre- and post-processing remotely with the relevant software before and after calculation, which reduces data transfer, avoids local application installation and configuration, and improves the efficiency of pre- and post-processing.

System administrators: want to integrate scattered graphics workstations and schedule them in a coordinated way to provide one-stop usage, which makes the IT architecture more flexible.

Customized visualization platform: on-demand

Integrates a variety of remote visualization applications, dynamically manages and schedules graphics workstations, and dynamically publishes and manages graphics software, providing a collaborative remote visualization management and scheduling platform.

Platform Features

General visualization platform: specified optimization

Integrates commercial visualization software with years of experience in application tuning, license management and integration, and hardware virtualization, providing an HPC-specific remote visualization solution.

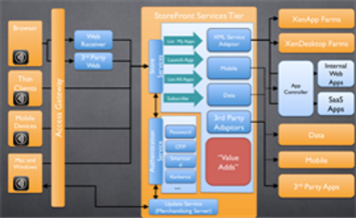

Citrix

As a virtualization solution for the Windows platform, Citrix can transform Windows desktops and applications into on-demand services that users can access from any device, anytime, anywhere.

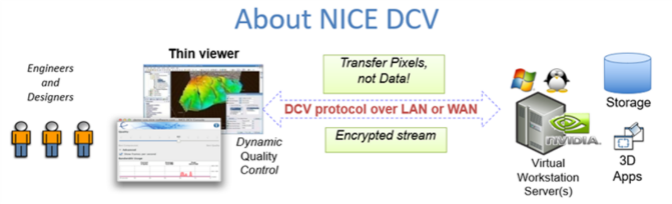

NICE DCV

NICE DCV (Desktop Cloud Visualization) is a lightweight remote display protocol. It allows users to remotely run 3D and graphics-intensive applications on Linux or Windows virtual desktops over varying network conditions, making full use of the functions and features of the remote NVIDIA GPU server. NICE DCV is easy to install and use, and supports various GPU virtualization technologies, including NVIDIA vGPU and NVIDIA GPU pass-through.

With a high-definition user experience, availability from anywhere, and adaptability to any network, remote visualization services can be widely used in engineering design, pre- and post-processing of simulation, mobile office, and other areas.

Engineering design

With flexible availability, a high-quality user experience, and other advantages, data visualization enables users anywhere to work out multiple design solutions in advance, create excellent visualizations, and easily carry out collaborative design and on-site delivery.

Pre- and post-processing of simulation

Combined with simulation applications, it can provide CAE/CFD engineers with a powerful visual environment for pre- and post-processing in finite element analysis, fluid analysis, and multi-body system simulation, which constitutes a full-process and full-cycle simulation service.

Mobile office

Supporting various terminals and operating systems, it provides flexible, efficient, and secure access for enterprise mobile office, enabling users to manage and communicate without restrictions of time or place and effectively improving work efficiency.

Based on the Magic Cube III supercomputer, Shanghai Supercomputer Center has built an open environment around the key elements of data, algorithms, and computing power. It offers full-life-cycle services covering data cleaning, data labeling, model development, model training, and application deployment, to promote the integration of application scenarios with intelligent computing power and create a new ecosystem of open intelligent computing.

Features:

SSC Artificial Intelligence Algorithm Platform

SSC Artificial Intelligence Algorithm Platform consists of 16 GPU servers. Each server has 2 Intel Xeon Gold 5118 CPUs, 4 NVIDIA Tesla V100 GPU cards, and 192 GB of memory. The platform integrates cloud computing and big data services to provide one-stop services, including data cleaning and data labeling for image, voice, and other data. In addition, based on its NLP ontology creation engine, the platform provides high-quality knowledge management services for NLP applications in different fields, including ontology-based intelligent word segmentation, knowledge graphs, and semantic search.
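The per-server figures above imply the platform's aggregate resources. The short sketch below simply multiplies them out; the class and function names (`ServerSpec`, `platform_totals`) are illustrative and not part of any SSC tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    """Per-server hardware as stated in the text."""
    cpus: int
    gpus: int
    memory_gb: int


def platform_totals(servers: int, spec: ServerSpec) -> dict:
    """Multiply per-server resources by the number of servers."""
    return {
        "cpus": servers * spec.cpus,
        "gpus": servers * spec.gpus,
        "memory_gb": servers * spec.memory_gb,
    }


# 16 servers, each with 2 CPUs, 4 V100 GPUs and 192 GB of memory.
totals = platform_totals(16, ServerSpec(cpus=2, gpus=4, memory_gb=192))
print(totals)  # {'cpus': 32, 'gpus': 64, 'memory_gb': 3072}
```

In other words, the platform as described offers 32 CPUs, 64 V100 GPUs, and 3,072 GB (3 TB) of host memory in total.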